Let’s cut to the chase: Interview preparation is one of the best things an institution can do to ensure a successful accreditation outcome.

Preparing for an accreditation site visit is always stressful for higher education faculty and staff, even under the best of circumstances. Depending on whether it's a regional (institutional) accrediting body, a state compliance audit, or a programmatic accreditor, there are certain processes and procedures that must be followed. While each body has its own nuances, there's one thing every institution should do to prepare, and that is to help its interviewees prepare. This piece will focus on helping educator preparation programs prepare for a Council for the Accreditation of Educator Preparation (CAEP) site visit.

Important note: The guidance below focuses exclusively on the final months and weeks leading up to a site visit. The actual preparation begins approximately 18 months before this point, when institutions typically start drafting their Self-Study Report (SSR).

2-4 Months Prior to the Site Visit

Approximately 2-4 months prior to a site visit, the CAEP team lead meets virtually with the educator preparation program (EPP) administrator(s) and staff. Sometimes representatives of the state's department of education also participate. By the end of this meeting, all parties should be "on the same page" and should be clear about what to expect in the upcoming site visit. This includes a general list of who the team will likely want to speak with when the time comes.

A Word About Virtual and/or Hybrid Site Reviews

The onset of Covid-19 precipitated a decision by CAEP to switch from onsite reviews to a virtual format. Virtual or hybrid site reviews require a different type of preparation than those conducted exclusively onsite. I think the further Covid recedes in the rearview mirror, the more likely accreditors are to gradually ease back into onsite reviews, or at least a hybrid model. I provided detailed guidance for onsite reviews in a previous post.

CAEP has assembled some very good guidelines for hosting effective accreditation virtual site visits, and I recommend that institutional staff familiarize themselves with those guidelines well in advance of their review.

Interviews: So Important in a CAEP Site Visit

Regardless of whether a site visit is conducted on campus or virtually, there’s something very common:

An institution can submit a stellar Self-Study Report and supporting pieces of evidence, only to fail miserably during the site review itself. I’ve seen this happen over and over again. Why? Because they don’t properly prepare interviewees. Remember that the purpose of site visit interviews is twofold:

First, site team reviewers need to corroborate what an institution has stated in their Self-Study Report, Addendum, and supporting pieces of evidence. In other words:

Is the institution really doing what they say they’re doing?

For example, if the institution has stated in their written documents that program staff regularly seek out and act on recommendations from their external stakeholders and partners, you can almost bet that interviewees will be asked about this. Moreover, they’ll be asked to cite specific examples. And they won’t just pose this question to one person. Instead, site team reviewers will attempt to corroborate information from multiple interviewees.

Second, site team reviewers use interviews to follow up on questions that still linger after they have read the previously submitted documents. For example, if neither the Self-Study Report nor the Addendum provided sufficient detail about how program staff ensure that internally created assessments meet quality criteria, reviewers will make that a focus in several interviews.

In most instances, the site team lead will provide a list of individuals who can respond accurately and confidently to team members’ questions. Within the educator preparation landscape, typical examples include:

However, I have seen instances where the team lead asks the institution to put together this list. Staff need to be prepared for either scenario.

Mock Visits: Essential to Site Review Interview Preparation

Just as you wouldn't commit to running a marathon a month from now when the farthest you've been walking is from the couch to the kitchen, an institution fails to fully prepare for an upcoming site visit at its peril, whether that visit is onsite, virtual, or hybrid.

I’ve come to be a big believer in mock visits. When I first started working in compliance and accreditation many years ago, I never saw their value. Truthfully, I saw them as a waste of time. In my mind, while not perfect, our institution was doing a very good job of preparing future teachers. And, we had submitted a Self-Study Report and supporting pieces of evidence which we believed communicated that good work. We took great care in the logistics of the visit and when the time came, we were filled with confidence about its outcome. There was one problem:

We didn’t properly prepare the people who were going to be interviewed.

During site visits, people are nervous. They’re terrified they’ll say the wrong thing, such as spilling the beans about something the staff hopes the site team reviewers won’t ask about. It happens. Frequently.

When people are nervous, some talk rapidly and almost incoherently. Some won't talk at all. Others will attempt to answer questions but fail to cite specific examples to back up their points. And still others may be tempted to use site visit interviews as an opportunity to air their grievances about program administrators. I've seen each and every one of these scenarios play out.

This is why it’s critical to properly prepare interviewees for this phase of the program review. And this can best be done through a mock site visit. Another important thing to keep in mind is that the mock visit should mirror the same format that site team members will use to conduct their program review. In other words, if the site visit will be conducted onsite, the mock visit should be conducted that same way. If it’s going to be a virtual site visit, then the mock should follow suit.

Bite the bullet, hire a consultant, and pay them to do this for you.

It simply isn't as effective when this is done in-house by someone familiar to the institution's staff. A consultant should be able to generate a list of potential questions based on the site team's feedback in the Formative Feedback Report. In addition to running a risk assessment, a good consultant should be able to provide coaching on how interviewees can communicate more effectively and articulately. And finally, at the conclusion of the mock visit, they should be able to provide institutional staff with a list of specific recommendations for what to keep working on in the weeks leading up to the site visit in order to best position the institution for a positive outcome.

If you’re asking if I perform this service for my clients, the answer is yes. There is no downside to preparation, and I strongly encourage all institutions to incorporate this piece into their planning and budget.

While the recommendations above may feel exhausting, they’re not exhaustive. I’ve touched on some of the major elements of site visit preparation here but there are many more. Feel free to reach out to me if I can support your institution’s CAEP site visit effort.

###

About the Author: A former public school teacher and college administrator, Dr. Roberta Ross-Fisher provides consultative support to colleges and universities in quality assurance, accreditation, educator preparation and competency-based education. Specialty: Council for the Accreditation of Educator Preparation (CAEP). She can be reached at: Roberta@globaleducationalconsulting.com

Top Graphic Credit: Pexels

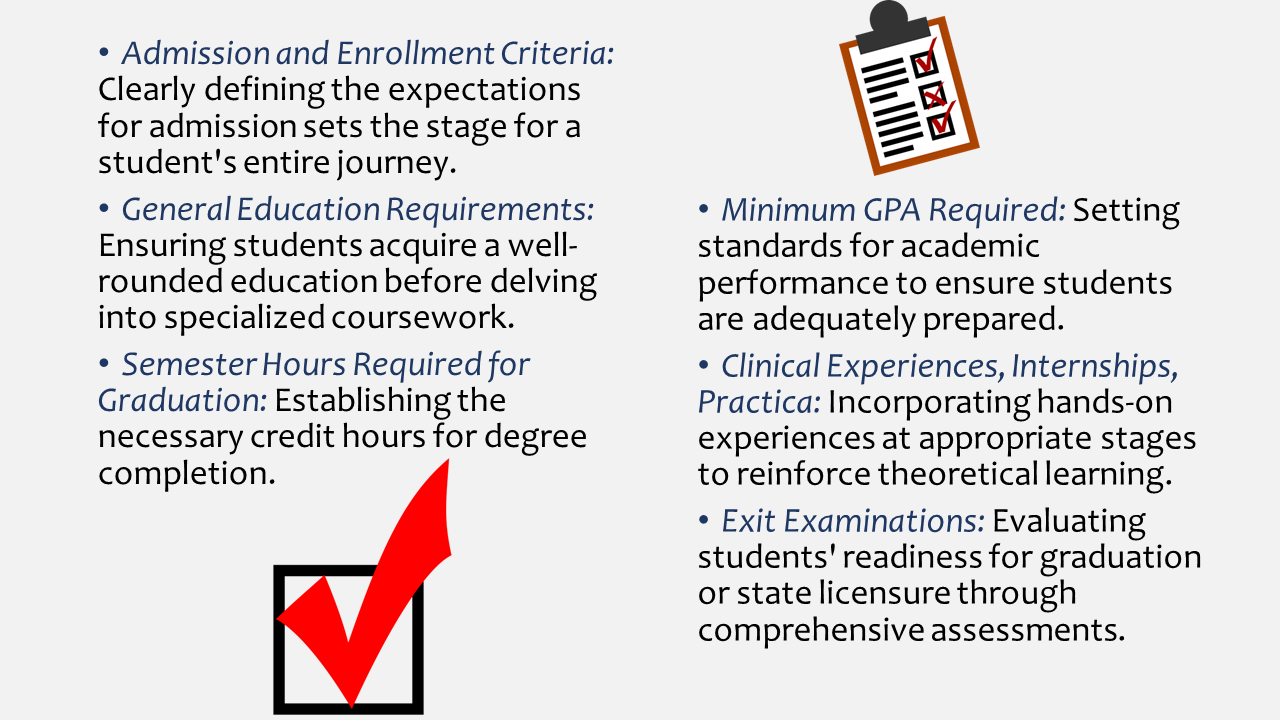

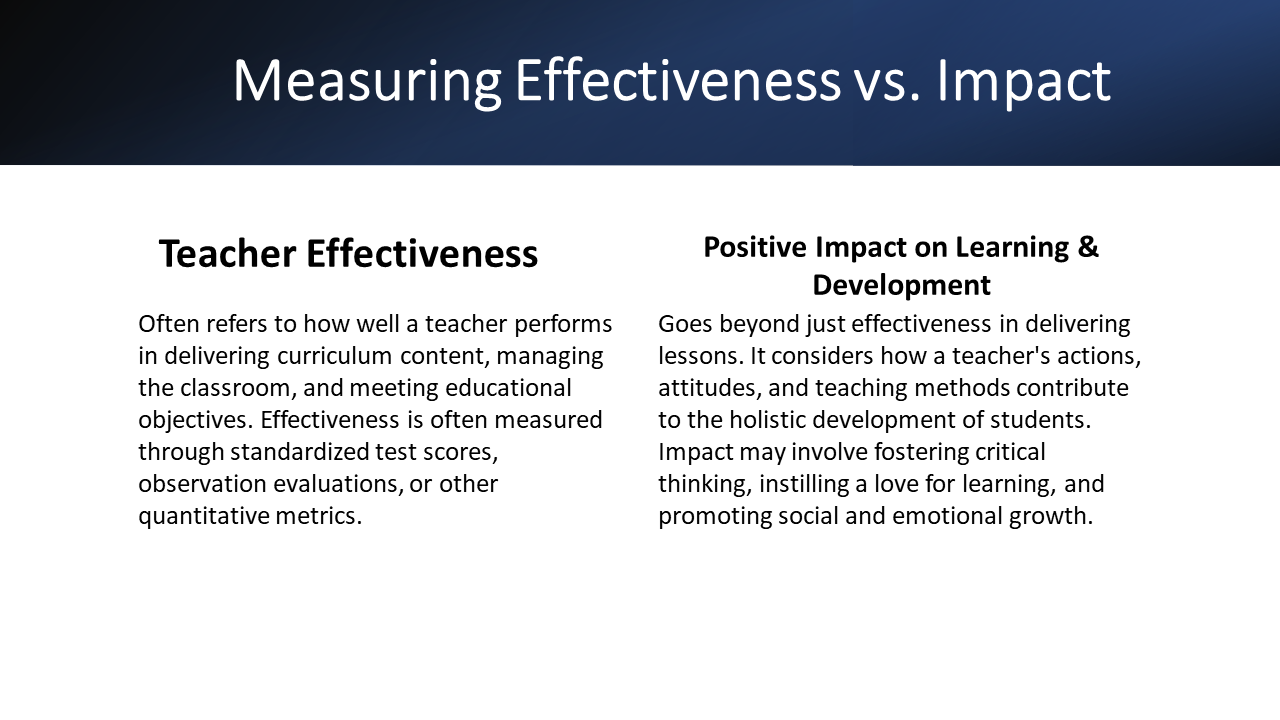

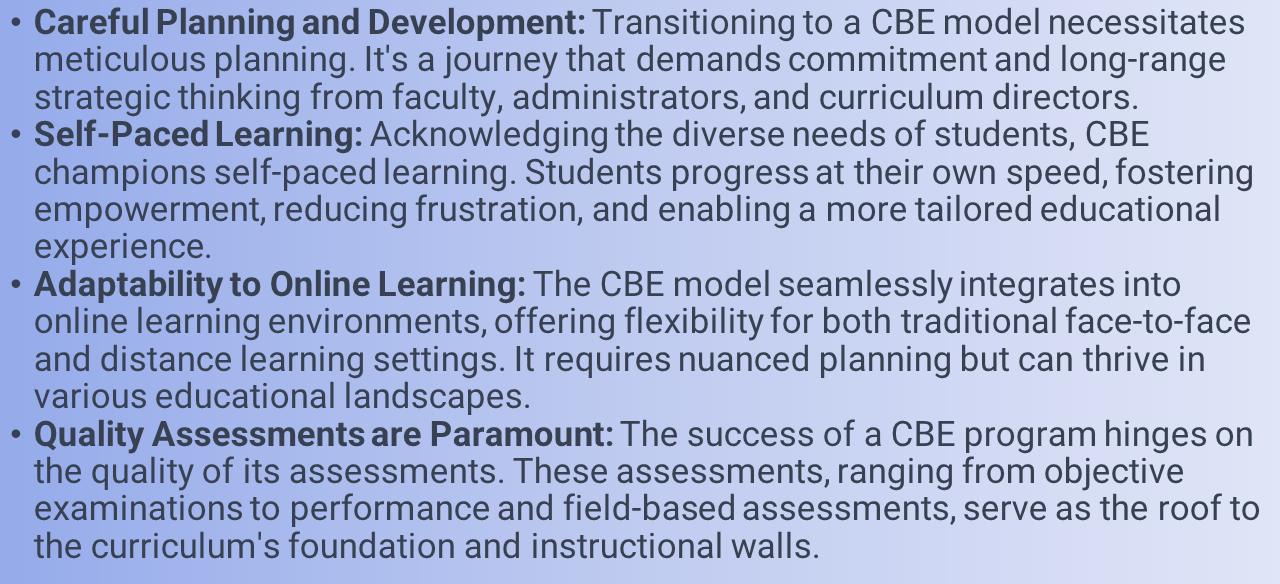

In a competency-based education (CBE) program, students showcase their knowledge and skills through a variety of high-quality formative and summative assessments. This approach shifts the focus from traditional testing to a more comprehensive evaluation of a student's true understanding and application of concepts.

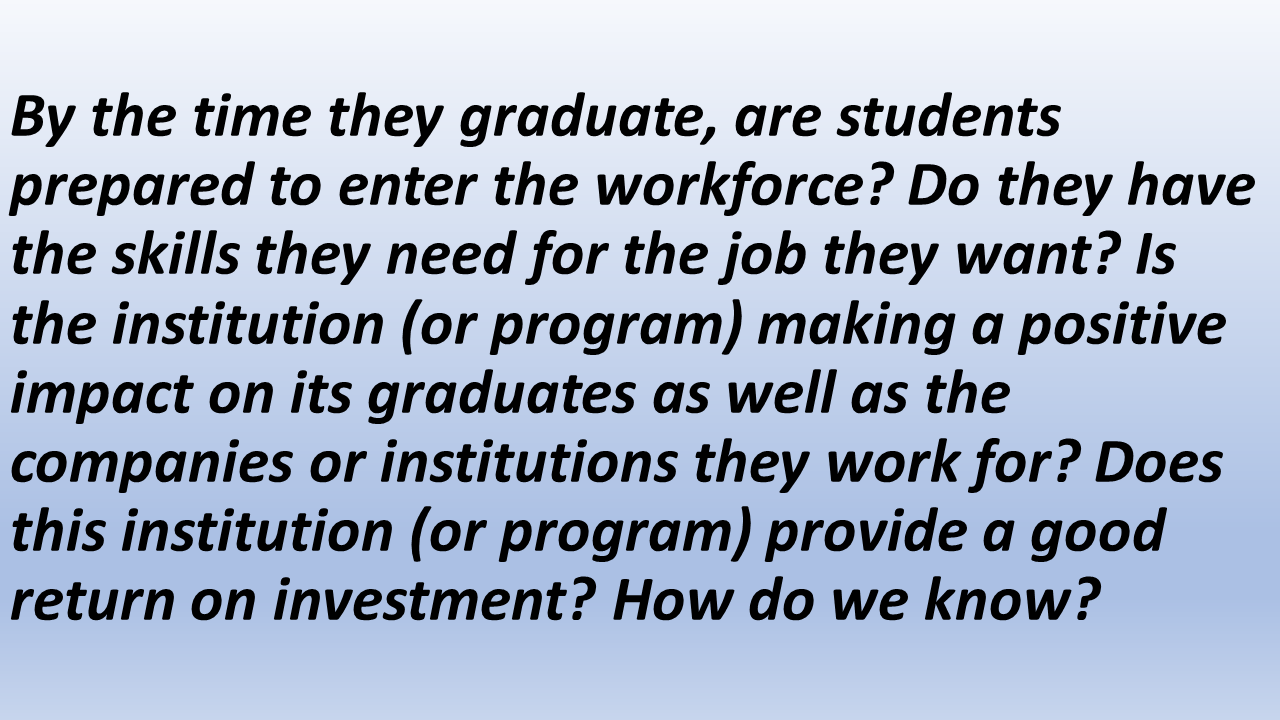

Initiating CBE at the college or university level requires a comprehensive institutional commitment. This commitment involves a paradigm shift in the faculty model, changes in registration and scheduling processes, and adaptations to student support services. Here are a few practical tips to navigate these challenges:

While the transition to Competency-Based Education may present challenges, the benefits are substantial. It provides a pathway for institutions to meet the needs of a diverse student population, acknowledging the rich experiences that learners bring to the table. Moreover, the flexibility of CBE can be a strategic advantage in attracting a broader range of students.